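Off-topic

I have converted to Arch!!!!!

Let's all drive the ArchLinux truck together (x

Cat's golden quote:

This is the last post I will publish during my first year of senior high. Of course, the "Ningbo Foreign Language School Survival Guide" will also get its final first-year update (x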

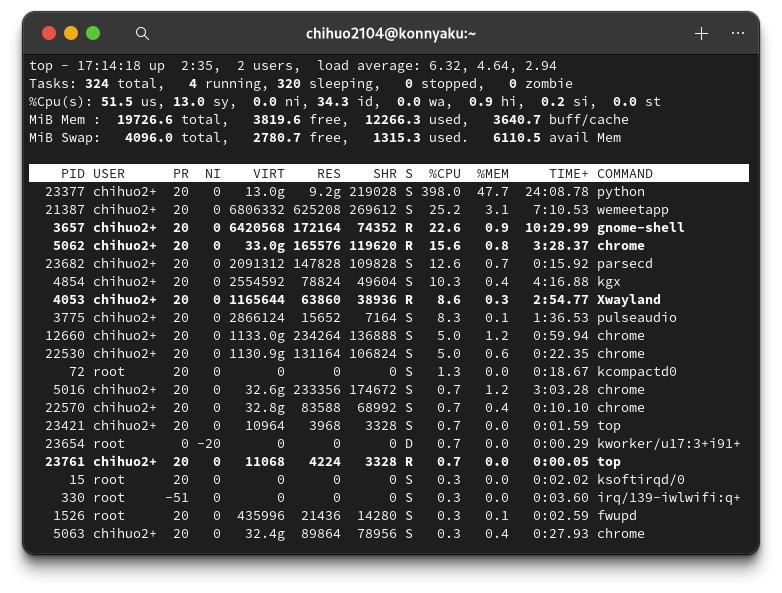

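Why did my laptop's RAM go up to 20 GB? Because I could not resist buying a Kingston stick for 229 yuan, and I also grabbed a 500 GB Samsung Pro 880 at a bone-breaking discount of 199 yuan (lol

chi's cost 450. Weak!

Every joy comes with a sorrow: all of my workspace data got wiped, and now I truly have nothing left (big sad

The chiOpenID source code is gone too, so I have no choice but to rewrite it.

The lesson here: backups matter.

mujitogawa, you are amazing! Off to compete in the national AI contest!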

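1. RWKV in brief

RWKV is a new neural network model that combines the strengths of RNNs and Transformers, allowing efficient parallel training and inference. RWKV stands for Receptance Weighted Key Value. It is a research project started by Peng Bo and has been integrated into Hugging Face's transformers library.

by edgeGPT

One more thing: Peng Bo is Chinese, and the trained models are open source!!! (A jab at a certain small software company here.) RWKV also runs on any machine with 8 GB or more of RAM; the only difference is how fast it goes.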

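2. Before you start

You need:

- A brain

- A computer with at least 8 GB of RAM and at least 40 GB of free disk space. Any OS works; this post uses Linux as the example

- git, Python and pip (look up how to install them and configure mirrors yourself). Miniconda/Anaconda also work, as long as you know how to use them

- A pretrained model

- The ChatRWKV environment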
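The hardware minimums in the list can be checked before downloading anything. The following is a minimal sketch, not part of the original setup: the function names are made up for illustration, and the RAM lookup relies on `os.sysconf`, which is assumed to be available (Linux and most Unix systems; it returns `None` elsewhere).

```python
import os
import shutil

MIN_RAM_GB = 8    # minimum RAM from the list above
MIN_DISK_GB = 40  # minimum free disk space from the list above


def total_ram_gb():
    """Total physical RAM in GiB, or None where sysconf does not expose it."""
    try:
        pages = os.sysconf("SC_PHYS_PAGES")
        page_size = os.sysconf("SC_PAGE_SIZE")
    except (AttributeError, ValueError, OSError):
        return None
    return pages * page_size / 1024**3


def free_disk_gb(path="."):
    """Free space in GiB on the filesystem that holds `path`."""
    return shutil.disk_usage(path).free / 1024**3


def meets_requirements(ram_gb, disk_gb):
    """True when both numbers clear the minimums listed above."""
    return ram_gb >= MIN_RAM_GB and disk_gb >= MIN_DISK_GB


if __name__ == "__main__":
    ram = total_ram_gb()
    disk = free_disk_gb()
    print(f"RAM: {ram} GiB, free disk: {disk:.1f} GiB")
    if ram is not None:
        print("OK" if meets_requirements(ram, disk) else "Below the minimums")
```

A machine sold as "8 GB" usually reports slightly less than 8 GiB, so treat a near miss on the RAM check as a warning rather than a hard failure.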

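The pretrained model can be downloaded here: https://huggingface.co/BlinkDL/rwkv-4-raven/blob/main/RWKV-4-Raven-7B-v11-Eng49%25-Chn49%25-Jpn1%25-Other1%25-20230430-ctx8192.pth Just click Download.

This is the Chinese-capable RWKV-4 Raven model (the one that is good at talking to people, roughly the counterpart of ChatGPT). It is 14.8 GB. Hugging Face's download speed is decent, but you will still wait about an hour (downloading with aria2 is recommended).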
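For an aria2 download, the link above needs one change: it points at the file's web page (`/blob/`), while the direct-download form on Hugging Face uses `/resolve/` in the same position. Here is a small sketch that rewrites the URL and builds an `aria2c` command line; the helper names and the connection count of 8 are illustrative assumptions, not something the original post specifies.

```python
def blob_to_resolve(url):
    """Turn a Hugging Face file-page URL (/blob/) into a direct-download URL (/resolve/)."""
    return url.replace("/blob/", "/resolve/", 1)


def aria2_command(url, connections=8):
    """Build an aria2c argument list; `connections` is an illustrative default."""
    return [
        "aria2c",
        "-c",                    # resume a partial download
        "-x", str(connections),  # max connections per server
        "-s", str(connections),  # split the file into this many pieces
        blob_to_resolve(url),
    ]


PAGE_URL = (
    "https://huggingface.co/BlinkDL/rwkv-4-raven/blob/main/"
    "RWKV-4-Raven-7B-v11-Eng49%25-Chn49%25-Jpn1%25-Other1%25-20230430-ctx8192.pth"
)

if __name__ == "__main__":
    # Print the command so it can be pasted into a shell.
    print(" ".join(aria2_command(PAGE_URL)))
```

Printing the command instead of running it keeps the sketch free of side effects; pass the list to `subprocess.run` if you prefer to launch the download from Python.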

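If you do not want this pretrained model, you can switch to another one, including smaller ones: https://huggingface.co/BlinkDL/

The model families are described as follows:

Raven models: good for direct chat and for +i instructions. They come in many language variants, so check which one you are getting. Good for chatting, completing tasks and writing code. They can also be tasked with writing drafts, outlines, stories, poems and so on, but the prose is not as good as the testNovel series.

Novel-ChnEng models: Chinese and English fiction models. Use +gen to generate world settings (if you know how to write prompts, you can steer the following plot and characters). They can write science fiction and fantasy. Not suited to chat or +i instructions.

Novel-Chn models: pure Chinese web-novel models. They can only continue web novels with +gen (no world settings and the like), but they write web novels better (in a lighter, easier style that suits both male-oriented and female-oriented fiction). Not suited to chat or +i instructions.

Novel-ChnEng-ChnPro models: Novel-ChnEng finetuned on high-quality works (classics, science fiction, fantasy, classical literature, translations and so on).

From the Zhihu column of RWKV's author, Peng Bo <https://zhuanlan.zhihu.com/p/618011122>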

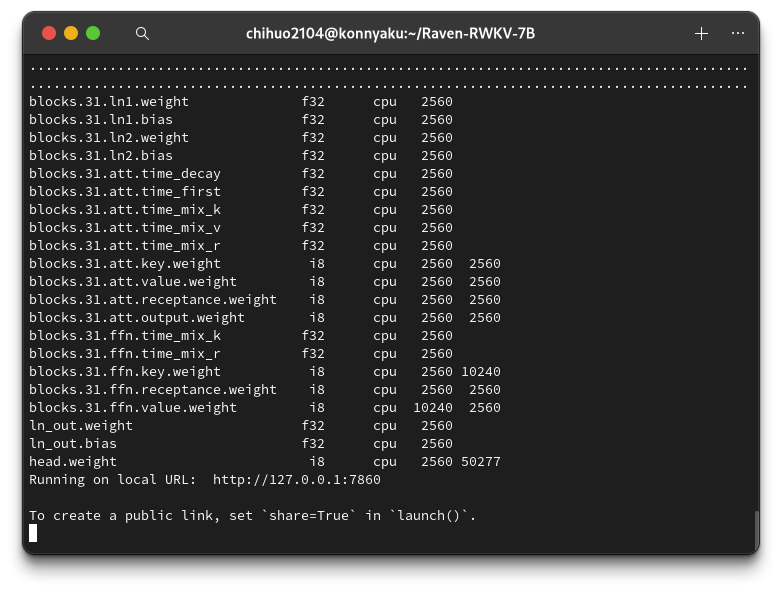

3. Usage

We need to set up a gradio environment.

First, clone the git repo from Hugging Face:

git clone https://huggingface.co/spaces/BlinkDL/Raven-RWKV-7B

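Then upgrade pip and install the dependencies listed in the repo's requirements.txt:

cd Raven-RWKV-7B

python -m pip install --upgrade pip -i https://pypi.tuna.tsinghua.edu.cn/simple

python -m pip install -r requirements.txt -i https://pypi.tuna.tsinghua.edu.cn/simple

If the Tsinghua mirror is no use to you (for example, you already go through a proxy), drop -i and everything after it.

Once that succeeds, edit app.py: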

import gradio as gr
import os, gc, copy, torch
from datetime import datetime
from huggingface_hub import hf_hub_download
from pynvml import *

# Uncomment the next two lines if you have an NVIDIA GPU
#nvmlInit()
#gpu_h = nvmlDeviceGetHandleByIndex(0)
ctx_limit = 1536

os.environ["RWKV_JIT_ON"] = '1'
# Set this to '1' if you have an NVIDIA GPU
os.environ["RWKV_CUDA_ON"] = '0' # if '1' then use CUDA kernel for seq mode (much faster)

from rwkv.model import RWKV
# Replace the path with your downloaded model file, and 'selection' with the
# strategy string you choose (for example 'cpu fp32' or 'cuda fp16')
model = RWKV(model='/path/to/module', strategy='selection')
from rwkv.utils import PIPELINE, PIPELINE_ARGS
pipeline = PIPELINE(model, "20B_tokenizer.json")

def generate_prompt(instruction, input=None):
    # Normalize line endings and collapse blank lines
    instruction = instruction.strip().replace('\r\n','\n').replace('\n\n','\n')
    # input defaults to None, so guard before calling .strip()
    input = (input or '').strip().replace('\r\n','\n').replace('\n\n','\n')
    if input:
        return f"""Below is an instruction that describes a task, paired with an input that provides further context. Write a response that appropriately completes the request.

# Instruction:
{instruction}

# Input:
{input}

# Response:
"""
    else:
        return f"""Below is an instruction that describes a task. Write a response that appropriately completes the request.

# Instruction:
{instruction}

# Response:
"""

def evaluate(
    instruction,
    input=None,
    token_count=200,
    temperature=1.0,
    top_p=0.7,
    presencePenalty = 0.1,
    countPenalty = 0.1,
):
    args = PIPELINE_ARGS(temperature = max(0.2, float(temperature)), top_p = float(top_p),
                     alpha_frequency = countPenalty,
                     alpha_presence = presencePenalty,
                     token_ban = [], # ban the generation of some tokens
                     token_stop = [0]) # stop generation whenever you see any token here

    instruction = instruction.strip().replace('\r\n','\n').replace('\n\n','\n')
    # input defaults to None, so guard before calling .strip()
    input = (input or '').strip().replace('\r\n','\n').replace('\n\n','\n')
    ctx = generate_prompt(instruction, input)

    all_tokens = []
    out_last = 0
    out_str = ''
    occurrence = {}
    state = None
    for i in range(int(token_count)):
        # Feed the whole prompt on the first step, then one sampled token at a time
        out, state = model.forward(pipeline.encode(ctx)[-ctx_limit:] if i == 0 else [token], state)
        # Penalize tokens that have already been generated
        for n in occurrence:
            out[n] -= (args.alpha_presence + occurrence[n] * args.alpha_frequency)

        token = pipeline.sample_logits(out, temperature=args.temperature, top_p=args.top_p)
        if token in args.token_stop:
            break
        all_tokens += [token]
        if token not in occurrence:
            occurrence[token] = 1
        else:
            occurrence[token] += 1

        tmp = pipeline.decode(all_tokens[out_last:])
        # Only emit text once it decodes to complete UTF-8 characters
        if '\ufffd' not in tmp:
            out_str += tmp
            yield out_str.strip()
            out_last = i + 1

    # Uncomment the next two lines if you have an NVIDIA GPU
    #gpu_info = nvmlDeviceGetMemoryInfo(gpu_h)
    #print(f'vram {gpu_info.total} used {gpu_info.used} free {gpu_info.free}')
    del out
    del state
    gc.collect()
    torch.cuda.empty_cache()
    yield out_str.strip()

examples = [
    ["Tell me about ravens.", "", 300, 1.2, 0.5, 0.4, 0.4],
    ["Write a python function to mine 1 BTC, with details and comments.", "", 300, 1.2, 0.5, 0.4, 0.4],
    ["Write a song about ravens.", "", 300, 1.2, 0.5, 0.4, 0.4],
    ["Explain the following metaphor: Life is like cats.", "", 300, 1.2, 0.5, 0.4, 0.4],
    ["Write a story using the following information", "A man named Alex chops a tree down", 300, 1.2, 0.5, 0.4, 0.4],
    ["Generate a list of adjectives that describe a person as brave.", "", 300, 1.2, 0.5, 0.4, 0.4],
    ["You have $100, and your goal is to turn that into as much money as possible with AI and Machine Learning. Please respond with detailed plan.", "", 300, 1.2, 0.5, 0.4, 0.4],
]

##########################################################################

chat_intro = '''The following is a coherent verbose detailed conversation between <|user|> and an AI girl named <|bot|>.

<|user|>: Hi <|bot|>, Would you like to chat with me for a while?

<|bot|>: Hi <|user|>. Sure. What would you like to talk about? I'm listening.
'''

def user(message, chatbot):
    chatbot = chatbot or []
    # print(f"User: {message}")
    return "", chatbot + [[message, None]]

def alternative(chatbot, history):
    if not chatbot or not history:
        return chatbot, history

    chatbot[-1][1] = None
    history[0] = copy.deepcopy(history[1])

    return chatbot, history

def chat(
    prompt,
    user,
    bot,
    chatbot,
    history,
    temperature=1.0,
    top_p=0.8,
    presence_penalty=0.1,
    count_penalty=0.1,
):
    args = PIPELINE_ARGS(temperature=max(0.2, float(temperature)), top_p=float(top_p),
                     alpha_frequency=float(count_penalty),
                     alpha_presence=float(presence_penalty),
                     token_ban=[], # ban the generation of some tokens
                     token_stop=[]) # stop generation whenever you see any token here

    if not chatbot:
        return chatbot, history

    message = chatbot[-1][0]
    message = message.strip().replace('\r\n','\n').replace('\n\n','\n')
    ctx = f"{user}: {message}\n\n{bot}:"

    if not history:
        prompt = prompt.replace("<|user|>", user.strip())
        prompt = prompt.replace("<|bot|>", bot.strip())
        prompt = prompt.strip()
        prompt = f"\n{prompt}\n\n"

        out, state = model.forward(pipeline.encode(prompt), None)
        history = [state, None, []] # [state, state_pre, tokens]
        # print("History reloaded.")

    [state, _, all_tokens] = history
    state_pre_0 = copy.deepcopy(state)

    out, state = model.forward(pipeline.encode(ctx)[-ctx_limit:], state)
    state_pre_1 = copy.deepcopy(state) # For recovery

    # print("Bot:", end='')

    begin = len(all_tokens)
    out_last = begin
    out_str: str = ''
    occurrence = {}
    for i in range(300):
        # Bias the newline token (id 187): forbid it first, then allow it, then encourage it
        if i <= 0:
            nl_bias = -float('inf')
        elif i <= 30:
            nl_bias = (i - 30) * 0.1
        elif i <= 130:
            nl_bias = 0
        else:
            nl_bias = (i - 130) * 0.25
        out[187] += nl_bias
        for n in occurrence:
            out[n] -= (args.alpha_presence + occurrence[n] * args.alpha_frequency)

        token = pipeline.sample_logits(out, temperature=args.temperature, top_p=args.top_p)
        next_tokens = [token]
        if token == 0:
            next_tokens = pipeline.encode('\n\n')
        all_tokens += next_tokens

        if token not in occurrence:
            occurrence[token] = 1
        else:
            occurrence[token] += 1

        out, state = model.forward(next_tokens, state)

        tmp = pipeline.decode(all_tokens[out_last:])
        if '\ufffd' not in tmp:
            # print(tmp, end='', flush=True)
            out_last = begin + i + 1
            out_str += tmp

            chatbot[-1][1] = out_str.strip()
            # Keep the same three-element layout that the unpacking above expects
            history = [state, state_pre_0, all_tokens]
            yield chatbot, history

        out_str = pipeline.decode(all_tokens[begin:])
        out_str = out_str.replace("\r\n", '\n').replace('\\n', '\n')

        if '\n\n' in out_str:
            break

        # State recovery
        if f'{user}:' in out_str or f'{bot}:' in out_str:
            idx_user = out_str.find(f'{user}:')
            idx_user = len(out_str) if idx_user == -1 else idx_user
            idx_bot = out_str.find(f'{bot}:')
            idx_bot = len(out_str) if idx_bot == -1 else idx_bot
            idx = min(idx_user, idx_bot)

            if idx < len(out_str):
                out_str = f" {out_str[:idx].strip()}\n\n"
                tokens = pipeline.encode(out_str)

                all_tokens = all_tokens[:begin] + tokens
                out, state = model.forward(tokens, state_pre_1)
                break

    # Uncomment the next two lines if you have an NVIDIA GPU
    #gpu_info = nvmlDeviceGetMemoryInfo(gpu_h)
    #print(f'vram {gpu_info.total} used {gpu_info.used} free {gpu_info.free}')

    gc.collect()
    torch.cuda.empty_cache()

    chatbot[-1][1] = out_str.strip()
    history = [state, state_pre_0, all_tokens]
    yield chatbot, history

##########################################################################

with gr.Blocks(title=title) as demo:

gr.HTML(f"<div style=\"text-align: center;\">

<h1>🐦Raven - {title}</h1>

</div>")

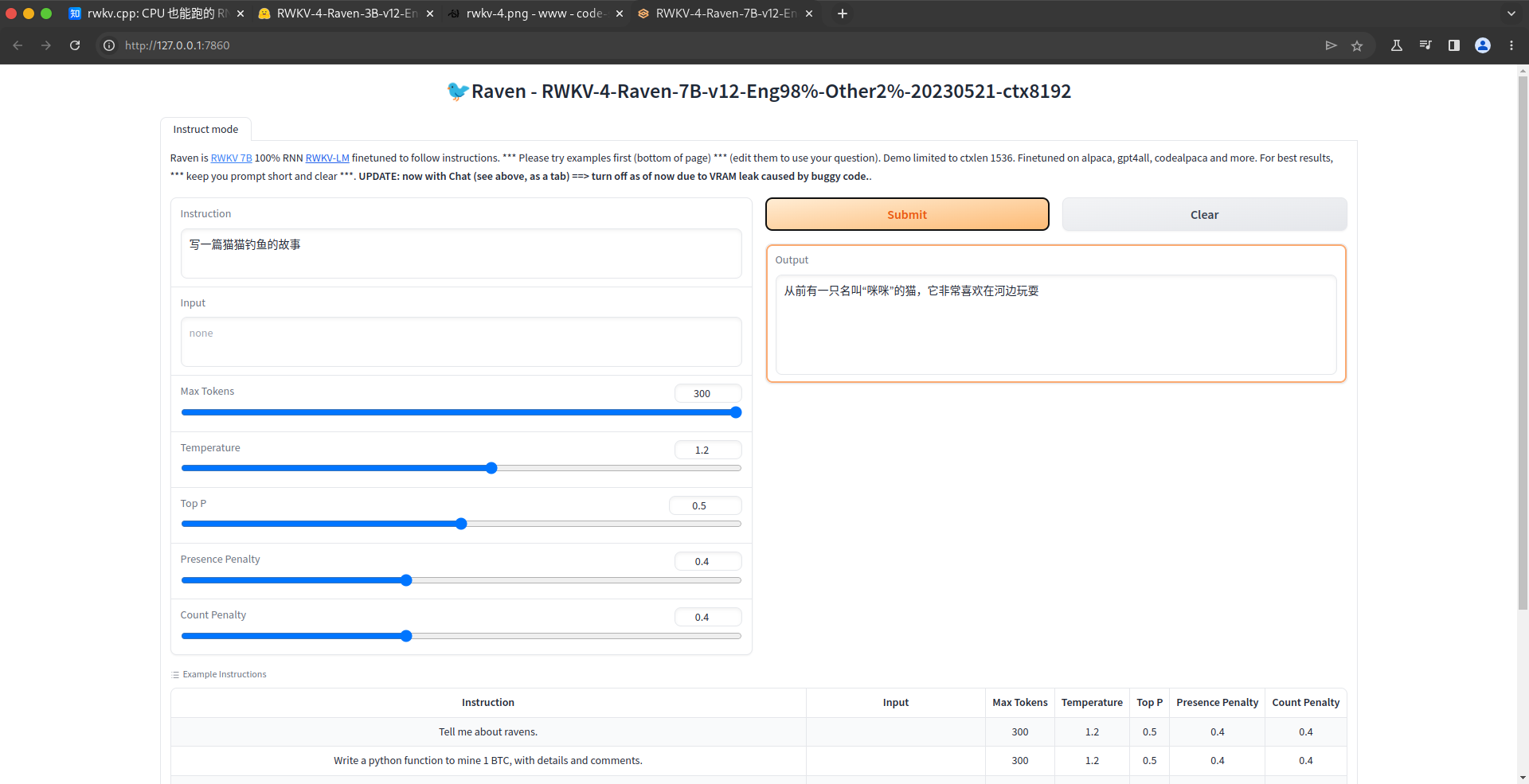

with gr.Tab("Instruct mode"):

gr.Markdown(f"Raven is [RWKV 7B](https://github.com/BlinkDL/ChatRWKV) 100% RNN [RWKV-LM](https://github.com/BlinkDL/RWKV-LM) finetuned to follow instructions. *** Please try examples first (bottom of page) *** (edit them to use your question). Demo limited to ctxlen {ctx_limit}. Finetuned on alpaca, gpt4all, codealpaca and more. For best results, *** keep you prompt short and clear ***. <b>UPDATE: now with Chat (see above, as a tab) ==> turn off as of now due to VRAM leak caused by buggy code.</b>.")

with gr.Row():

with gr.Column():

instruction = gr.Textbox(lines=2, label="Instruction", value="Tell me about ravens.")

input = gr.Textbox(lines=2, label="Input", placeholder="none")

token_count = gr.Slider(10, 300, label="Max Tokens", step=10, value=300)

temperature = gr.Slider(0.2, 2.0, label="Temperature", step=0.1, value=1.2)

top_p = gr.Slider(0.0, 1.0, label="Top P", step=0.05, value=0.5)

presence_penalty = gr.Slider(0.0, 1.0, label="Presence Penalty", step=0.1, value=0.4)

count_penalty = gr.Slider(0.0, 1.0, label="Count Penalty", step=0.1, value=0.4)

with gr.Column():

with gr.Row():

submit = gr.Button("Submit", variant="primary")

clear = gr.Button("Clear", variant="secondary")

output = gr.Textbox(label="Output", lines=5)

data = gr.Dataset(components=[instruction, input, token_count, temperature, top_p, presence_penalty, count_penalty], samples=examples, label="Example Instructions", headers=["Instruction", "Input", "Max Tokens", "Temperature", "Top P", "Presence Penalty", "Count Penalty"])

submit.click(evaluate, [instruction, input, token_count, temperature, top_p, presence_penalty, count_penalty], [output])

clear.click(lambda: None, [], [output])

data.click(lambda x: x, [data], [instruction, input, token_count, temperature, top_p, presence_penalty, count_penalty])

# with gr.Tab("Chat (Experimental - Might be buggy - use ChatRWKV for reference)"):

# gr.Markdown(f'''<b>*** The length of response is restricted in this demo. Use ChatRWKV for longer generations. ***</b> Say "go on" or "continue" can sometimes continue the response. If you'd like to edit the scenario, make sure to follow the exact same format: empty lines between (and only between) different speakers. Changes only take effect after you press [Clear]. <b>The default "Bob" & "Alice" names work the best.</b>''', label="Description")

# with gr.Row():

# with gr.Column():

# chatbot = gr.Chatbot()

# state = gr.State()

# message = gr.Textbox(label="Message", value="Write me a python code to land on moon.")

# with gr.Row():

# send = gr.Button("Send", variant="primary")

# alt = gr.Button("Alternative", variant="secondary")

# clear = gr.Button("Clear", variant="secondary")

# with gr.Column():

# with gr.Row():

# user_name = gr.Textbox(lines=1, max_lines=1, label="User Name", value="Bob")

# bot_name = gr.Textbox(lines=1, max_lines=1, label="Bot Name", value="Alice")

# prompt = gr.Textbox(lines=10, max_lines=50, label="Scenario", value=chat_intro)

# temperature = gr.Slider(0.2, 2.0, label="Temperature", step=0.1, value=1.2)

# top_p = gr.Slider(0.0, 1.0, label="Top P", step=0.05, value=0.5)

# presence_penalty = gr.Slider(0.0, 1.0, label="Presence Penalty", step=0.1, value=0.4)

# count_penalty = gr.Slider(0.0, 1.0, label="Count Penalty", step=0.1, value=0.4)

# chat_inputs = [

# prompt,

# user_name,

# bot_name,

# chatbot,

# state,

# temperature,

# top_p,

# presence_penalty,

# count_penalty

# ]

# chat_outputs = [chatbot, state]

# message.submit(user, [message, chatbot], [message, chatbot], queue=False).then(chat, chat_inputs, chat_outputs)

# send.click(user, [message, chatbot], [message, chatbot], queue=False).then(chat, chat_inputs, chat_outputs)

# alt.click(alternative, [chatbot, state], [chatbot, state], queue=False).then(chat, chat_inputs, chat_outputs)

# clear.click(lambda: ([], None, ""), [], [chatbot, state, message], queue=False)

demo.queue(concurrency_count=1, max_size=10)

demo.launch(share=False)

The code above starts a web server. If it does not run properly, you can fall back to the 6 GB model: https://huggingface.co/BlinkDL/rwkv-4-raven/blob/main/RWKV-4-Raven-3B-v12-Eng49%25-Chn49%25-Jpn1%25-Other1%25-20230527-ctx4096.pth
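Besides switching to a smaller model, the `strategy` argument that app.py leaves as the placeholder 'selection' also decides where the weights live and in what precision. The sketch below picks between three strategy strings that the rwkv package accepts ('cpu fp32', 'cuda fp16', 'cuda fp16i8'). The function name and the VRAM thresholds are illustrative guesses, not measured values or anything the original post specifies.

```python
def choose_strategy(has_cuda, vram_gb=0.0, weights_fp16_gb=14.8):
    """Pick a strategy string for RWKV(model=..., strategy=...).

    weights_fp16_gb is the size of the .pth file (14.8 for the 7B model above).
    The +1 GB headroom and the "half for int8" rule are rough assumptions.
    """
    if not has_cuda:
        return "cpu fp32"      # CPU only: slow, and fp32 needs about twice the file size in RAM
    if vram_gb >= weights_fp16_gb + 1:
        return "cuda fp16"     # all weights in fp16 on the GPU
    if vram_gb >= weights_fp16_gb / 2 + 1:
        return "cuda fp16i8"   # int8-quantized weights, roughly half the VRAM
    return "cpu fp32"          # too little VRAM: fall back to the CPU


if __name__ == "__main__":
    print(choose_strategy(has_cuda=False))
    print(choose_strategy(has_cuda=True, vram_gb=24))
    print(choose_strategy(has_cuda=True, vram_gb=10))
```

In app.py the result would replace the placeholder, for example `RWKV(model='/path/to/module', strategy=choose_strategy(torch.cuda.is_available(), vram_gb))`, where `vram_gb` is whatever your GPU reports.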

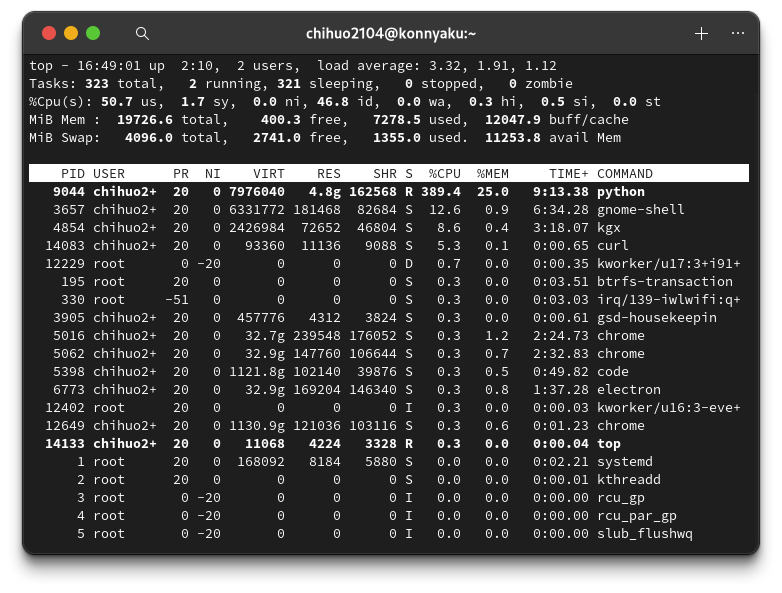

On my machine the 6 GB model used 10 GB of RAM and the generation speed was not great either; the 14 GB model simply crashed the computer.
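These numbers are roughly what a weights-only estimate predicts: parameter count times bytes per parameter. The sketch below uses nominal parameter counts (3 and 7 billion) and ignores activations, recurrent state and runtime overhead, so treat the results as lower bounds rather than predictions; the 4-bit figure also ignores the per-block scale data that real quantization formats store.

```python
# Bytes used to store one parameter at each precision (4-bit ignores block overhead).
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "4bit": 0.5}


def weights_gb(params_billion, dtype):
    """Weights-only size in GB (10**9 bytes) for a model with the given parameter count."""
    return params_billion * BYTES_PER_PARAM[dtype]


if __name__ == "__main__":
    for name, params in (("3B", 3.0), ("7B", 7.0)):
        row = ", ".join(f"{d}: {weights_gb(params, d):.1f} GB" for d in BYTES_PER_PARAM)
        print(f"{name} -> {row}")
```

For the 3B model this gives 6 GB at fp16 and 12 GB at fp32, which brackets the 10 GB observed above. For 7B, fp16 alone is about 14 GB, which leaves little room on a machine with 14 to 20 GB of RAM.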

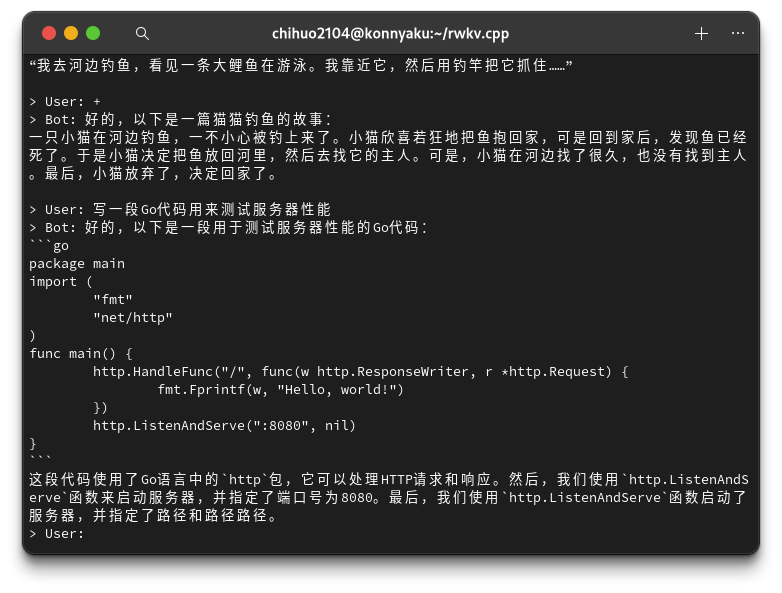

4. rwkv.cpp to the rescue

If the RWKV code above will not run on your computer, you can try rwkv.cpp, which is optimized to reduce resource usage.

First, clone the rwkv.cpp repository:

git clone --recursive https://github.com/saharNooby/rwkv.cpp.git

cd rwkv.cpp

Then convert the model. If you hit errors, go into the rwkv folder and install the dependencies.

python rwkv/convert_pytorch_to_ggml.py /path/to/file ./rwkv.cpp-7B.bin float16

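If you cannot work out the path to your file, on Windows 11 you can right-click the file and copy its path.

Before quantizing the model, you need the prebuilt library, which is available from the project's GitHub Releases page.

Copy the downloaded file into the rwkv/ directory under the repository root.

Then quantize the model: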

python rwkv/quantize.py ./rwkv.cpp-7B.bin ./rwkv.cpp-7B-Q4_1_O.bin 4

Finally, it is ready to serve:

python rwkv/chat_with_bot.py ./rwkv.cpp-7B-Q4_1_O.bin
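The convert, quantize and chat steps can also be chained from one script. This is only a sketch that reuses the exact script names and arguments shown above; the function names, the `work_dir` and `tag` parameters are made up for illustration, and it assumes you run it from the rwkv.cpp repository root with the library already in place.

```python
import subprocess
import sys


def rwkv_cpp_commands(pth_path, work_dir=".", tag="7B"):
    """Return the convert, quantize and chat commands in the order used above."""
    fp16_bin = f"{work_dir}/rwkv.cpp-{tag}.bin"
    quant_bin = f"{work_dir}/rwkv.cpp-{tag}-Q4_1_O.bin"
    py = sys.executable  # use the same interpreter that runs this script
    return [
        [py, "rwkv/convert_pytorch_to_ggml.py", pth_path, fp16_bin, "float16"],
        [py, "rwkv/quantize.py", fp16_bin, quant_bin, "4"],
        [py, "rwkv/chat_with_bot.py", quant_bin],
    ]


def run_all(pth_path):
    """Run the three steps in order, stopping at the first one that fails."""
    for cmd in rwkv_cpp_commands(pth_path):
        subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Print the commands instead of running them; call run_all(...) to execute.
    for cmd in rwkv_cpp_commands("/path/to/file"):
        print(" ".join(cmd))
```

Building the commands as lists avoids shell quoting problems with the `%` characters in the model file name.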

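Measured: it uses 5 to 6 GB of RAM, and the generation speed is acceptable (2 to 3 seconds per Chinese character).

5. Summary

Overall, RWKV is a pretty good language model. Once I get my hands on a 1660 Ti, I am going to train you (x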
